Because two separate samples are taken to compute an F-score and the samples do not have to be the same size, there are two separate degrees of freedom - one for each sample. There are rows and columns on each F-table, and both are for degrees of freedom. First notice that there are a number of F-tables, one for each of several different levels of α (or at least a table for each two α’s with the F-values for one α in bold type and the values for the other in regular type). If more or less chance is wanted, α can be varied. The usual choice is 5 per cent, or as statisticians say, “ α –. Before using the tables, the researcher must decide how much chance he or she is willing to take that the null will be rejected when it is really true. Using the F-tables to decide how close to one is close enough to accept the null hypothesis (truly formal statisticians would say “fail to reject the null”) is fairly tricky because the F-distribution tables are fairly tricky. The second is that there is a difference, and it is called the alternative, and is denoted H 1 or H a. The first is the null hypothesis that there is no difference (hence null). Formally, two hypotheses are needed for completeness. There are two sets of details: first, formally writing hypotheses, and second, using the F-distribution tables so that you can tell if your F-score is close to one or not. The basic method must be fleshed out with some details if you are going to use this test at work. If the F-score is far from one, then conclude that the populations probably have different variances. If the F-score is close to one, conclude that your hypothesis is correct and that the samples do come from populations with equal variances. Compute the F-score by finding the ratio of the sample variances. Hypothesize that the samples come from populations with the same variance. If you compute the F-score, and it is close to one, you accept your hypothesis that the samples come from populations with the same variance. 
Because the F-statistic is the ratio of two sample variances, when the two sample variances are close to equal, the F-score is close to one. Obviously, if the two variances are very close to being equal the two samples could easily have come from populations with equal variances. You would have two samples (one of size n 1 and one of size n 2) and the sample variance from each.

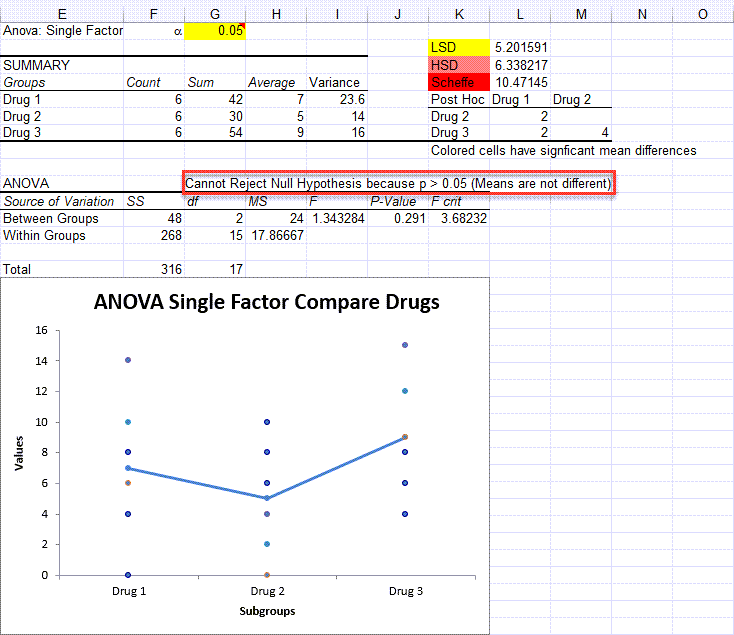

A simple test: Do these two samples come from populations with the same variance?īecause the F-distribution is generated by drawing two samples from the same normal population, it can be used to test the hypothesis that two samples come from populations with the same variance. The second is one-way analysis of variance (ANOVA), which uses the F-distribution to test to see if three or more samples come from populations with the same mean. The first is a very simple test to see if two samples come from populations with the same variance. There are two uses of the F-distribution that will be discussed in this chapter. Since F goes from zero to very large, with most of the values around one, it is obviously not symmetric there is a long tail to the right, and a steep descent to zero on the left. It is equally possible for s 2 2 to be a lot larger than s 1 2, and then F would be very close to zero. If s 1 2 is a lot larger than s 2 2, F can be quite large. Thinking about ratios requires some care.

All of the F-scores will be positive since variances are always positive - the numerator in the formula is the sum of squares, so it will be positive, the denominator is the sample size minus one, which will also be positive. If s 1 2 and s 2 2 come from samples from the same population, then if many pairs of samples were taken and F-scores computed, most of those F-scores would be close to one.

Think about the shape that the F-distribution will have. Because we know that sampling distributions of the ratio of variances follow a known distribution, we can conduct hypothesis tests using the ratio of variances. Not surprisingly, over the intervening years, statisticians have found that the ratio of sample variances collected in a number of different ways follow this same distribution, the F-distribution. Years ago, statisticians discovered that when pairs of samples are taken from a normal population, the ratios of the variances of the samples in each pair will always follow the same distribution.
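To make the behaviour of the ratio of sample variances concrete, here is a minimal simulation sketch using only the Python standard library. It is an illustration under stated assumptions rather than part of the original text: the sample sizes (10 and 15), the number of simulated pairs, the standard normal population, and the helper names `f_score`, `simulate_f_scores` and `empirical_quantile` are all hypothetical choices. It draws many pairs of samples from one normal population, computes the F-score for each pair, and then reports where the scores fall, including the point that only about 5 per cent of them exceed, which plays roughly the role of an upper-tail table value for α = .05.

```python
import random
import statistics


def f_score(sample_1, sample_2):
    """Ratio of the two sample variances (each computed with n - 1)."""
    return statistics.variance(sample_1) / statistics.variance(sample_2)


def simulate_f_scores(n_1, n_2, pairs=10000, seed=0):
    """Draw pairs of samples from one normal population; return their F-scores."""
    rng = random.Random(seed)
    scores = []
    for _ in range(pairs):
        sample_1 = [rng.gauss(0.0, 1.0) for _ in range(n_1)]
        sample_2 = [rng.gauss(0.0, 1.0) for _ in range(n_2)]
        scores.append(f_score(sample_1, sample_2))
    return scores


def empirical_quantile(sorted_values, q):
    """Value below which roughly a fraction q of the sorted values fall."""
    index = min(int(q * len(sorted_values)), len(sorted_values) - 1)
    return sorted_values[index]


# Hypothetical sample sizes; each sample has its own degrees of freedom (n - 1).
n_1, n_2 = 10, 15
scores = sorted(simulate_f_scores(n_1, n_2))

median = empirical_quantile(scores, 0.50)
upper_cutoff = empirical_quantile(scores, 0.95)  # exceeded by about 5% of scores

print("degrees of freedom:", n_1 - 1, "and", n_2 - 1)
print("smallest F-score:", round(scores[0], 3))
print("median F-score:", round(median, 3))
print("mean F-score:", round(statistics.mean(scores), 3))
print("simulated 5% upper cutoff:", round(upper_cutoff, 3))
```

Every simulated F-score is positive, the median sits near one, and the mean is pulled above the median by the long right tail. Changing `n_1` or `n_2` moves the cutoff, which is why the two degrees of freedom matter when deciding whether an F-score is far from one.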
